Assessment of competence in local anaesthetic thoracoscopy: development and validity investigation of a new assessment tool

Introduction

Diagnostic thoracoscopy in the management of exudative pleural effusion of unknown origin can be performed either as video-assisted thoracoscopic surgery under general anaesthesia or as local anaesthetic thoracoscopy (LAT) under conscious sedation. For LAT (formerly called medical thoracoscopy), the semi-rigid thoracoscope, with its increased maneuverability, has replaced the rigid thoracoscope in many institutions, and semi-rigid thoracoscopy was found to be a safe and simple procedure with acceptable sensitivity for malignancy (1,2). LAT allows both biopsy and pleurodesis and is one of the techniques with the highest diagnostic yield in cytology-negative exudative pleural effusion. It is increasingly being used by respiratory physicians (3,4), but certain recommendations must be followed to maintain and enhance the safety of the procedure (5), and there is an increasing need to train respiratory physicians in LAT (6).

However, in contrast to other technical procedures for lung cancer diagnostics such as endoscopic ultrasound (7), LAT has been disseminated without consensus on how operators should be trained and how their competences should be assessed. Current training follows the traditional apprenticeship method. The American College of Chest Physicians stated that, aside from extensive knowledge of pleural and thoracic anatomy, ample experience is also a requirement. To attain basic competency, physicians are recommended to perform at least 20 procedures in a supervised setting, and 10 procedures to maintain competency (8). However, doctors learn at different paces, and clinical training opportunities might be scarce in some departments (9). Instead of training on patients and relying on sheer (arbitrary) volume requirements to reach competency, front-line medical educators recommend shifting to competency-based assessment following mastery learning in a simulated environment (10). Defensible and sound assessment is the cornerstone of mastery learning and is increasingly being applied in medical education (11,12).

The literature on evidence-based assessment tools for LAT is sparse. Against this background, we decided to develop an assessment tool for competences in LAT and to investigate its validity.

The aims of this study were:

- To develop an assessment tool for LAT;

- To explore validity evidence of the assessment tool in a simulation-based setting;

- To establish a pass/fail standard that can be used to ensure competence in a mastery learning training program.

We present the following article in accordance with the MDAR checklist (available at https://dx.doi.org/10.21037/jtd-20-3560).

Methods

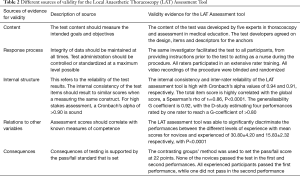

The study was conducted prospectively, and performed at the Copenhagen Academy for Medical Education and Simulation from September 2018 to June 2019. Validity investigation of the assessment tool was based on the unified framework proposed by Messick, drawing evidence of validity from five sources: content, response process, internal structure, relations to other variables, and consequences (13). The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). It is an educational study that did not include patient participation and therefore did not require ethical approval in accordance with Danish law. Informed consent to participate in the study was obtained.

Test development

The content of the assessment tool was developed by a collaboration between four respiratory physicians with expertise in LAT (PFC, UB, NM, and NR) and a professor in medical education with expertise regarding assessment tools (LK). This was based on the important competences that should be considered when assessing a trainee performing LAT: sterile approach, instillation of local anesthesia, skin incision, insertion of trocar, insertion of videoscope, systematic exploration of the thoracic cavity, location of possible tumors, biopsy of these, avoiding lung lesions, and insertion of chest tube (Figure 1). Once the test content and competences were agreed upon, a pilot rating was initiated to ensure its usability.

The simulated set-up

A thorax/lung model was developed and built in silicone for the study, making it possible to perform a completely standardized LAT procedure from administration of local anaesthesia to inspection of the thoracic cavity and biopsy (Figure 2). Five predetermined tumors were distributed in the pleural cavity. All necessary equipment was available, including the thoracoscope (LTF-160 and EVIS Exera II, Olympus, Tokyo, Japan).

Participants

Two groups of participants were enrolled. The novice group comprised junior doctors in their clinical clerkship with no experience in LAT, flexible bronchoscopy, or laparoscopy. The experienced group comprised respiratory consultants who had performed more than 20 LAT procedures.

Preprocedural preparation

The participants were provided with written material outlining the stepwise approach to the procedure (14) as well as oral information on the set-up given by the primary investigator (LJN).

Video recording

The procedures were video recorded with two cameras: one focused on the hands of the operator, while the other (a Medicapture USB200 device connected to the Olympus endoscopic tower) captured images from the screen. The videos were processed and merged using the VSDC Free Video Editor 3.3.0.394 (Flash-Integro LLC). They were saved in an online hosting service (Vimeo, Inc., New York, USA) and embedded in an online rating platform (Research Electronic Data Capture software [REDCap (15)], hosted at the Capital Region of Denmark). All videos were anonymized to allow for mutual blinded rating.

The simulated procedure

Prior to the test, the participants performed a warm-up exercise that included insertion of the thoracoscope and exploration of the “cavity” using a plastic box specially designed by an engineer (MBSS) for endoscopy practice. The participants were instructed to find four letters (T, E, S, T) that were strategically placed inside the box. Thereafter, the participants performed LAT, starting with instillation of local anesthesia, skin incision, and insertion of trocar, continuing with insertion of the scope, systematic exploration of the thoracic cavity, and location and biopsy of tumors, and ending with insertion of the chest tube. They had to perform two procedures consecutively, with a 5-minute rest in between. LJN assisted as a nurse during all procedures but did not offer any kind of advice to the doctors.

Pilot rating and rater calibration

Four consultants with extensive experience in LAT were invited to assess all video performances (NR, NM, PFC, UB). An initial pilot rating of three videos was carried out by two of the raters (PFC, UB) to assess whether they had understood how the assessment tool should be used. After assessing each video individually, the raters compared their scoring and discussed any discrepancies. The test items and the anchor descriptors for 1, 3, and 5 of the rating scale were reworded as a result of the discussions. A final rater training followed in which all four raters assessed the same three videos using the revised tool. A rater instruction was also sent out to all raters to guide them through the process.

Ratings

Individual links were sent to each of the four raters through REDCap. The raters could not access each other’s rating profiles, and the order of the 34 videos was individually randomised. All 136 assessments were automatically stored in REDCap and later transferred to SPSS Statistics version 25.0 (IBM, NY, USA) for statistical analysis.

Statistical analysis

Cronbach’s alpha was calculated to explore internal consistency of the test items and interrater reliability. The correlation between the total item score and the global rating was calculated using Spearman’s rank correlation coefficient (16). Pearson’s correlation coefficient was used to measure the correlation between the first and second performances, i.e., test-retest reliability. A generalisability study (G) and a decision study (D) were performed to explore the generalisability of the LAT assessment tool and the optimal combinations of assessed procedures and raters (17). Differences in performance scores between the two groups were tested with an independent samples t-test. We used the contrasting groups’ method to determine a pass/fail standard, defined as the intercept between the normalised distributions of performance scores of the novices and the experienced participants (18). All statistical analyses were performed using SPSS.
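As an illustration of the internal consistency statistic named above, the following is a minimal sketch of Cronbach's alpha in plain Python. It is not the authors' SPSS procedure; the function name `cronbach_alpha` and the small ratings matrix are hypothetical and are not study data. The same function could in principle be applied with raters as columns instead of items to explore interrater reliability.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for rows of assessments and columns of items."""
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item (column) across all assessments
    item_variances = [
        variance([row[j] for row in scores]) for j in range(n_items)
    ]
    # Sample variance of the summed score across all assessments
    total_variance = variance([sum(row) for row in scores])
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings: 5 assessments x 4 items on a 1-5 scale (NOT study data)
example = [
    [1, 2, 1, 2],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [4, 5, 4, 4],
    [5, 5, 5, 4],
]
alpha = cronbach_alpha(example)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Alpha approaches 1 when the items rise and fall together across assessments, which is the pattern expected if all items reflect the same underlying competence.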

Results

Ten novice and eight experienced participants were enrolled in the study. However, the videos of one novice were corrupted and therefore excluded, leaving nine novice participants for analysis. Table 1 presents the characteristics of the participants. All participants performed two consecutive procedures, generating a total of 34 video recordings that were rated by four raters, resulting in 136 assessments for analysis. Evidence of validity from the five sources is summarised in Table 2.

Content

The Local Anaesthetic Thoracoscopy Assessment Tool consists of eight procedural items and a global rating scale (Figure 2). The item regarding sterile technique was added after rater training. The global rating scale was included to ensure that the included items covered all important aspects of the procedure.

Response process

Standardized data collection was ensured using the same researcher (LJN) to conduct the data collection throughout the study. Furthermore, the simulation-based setting provided a controlled environment for testing without the difference in procedural difficulty that is unavoidable in a clinical setting. Finally, the response process was also optimized by providing rater training and calibration before the actual rating of performances started.

Internal structure

The internal consistency reliability of the test items was high, with a Cronbach’s alpha of 0.94, while the inter-rater reliability was 0.91. The total item score correlated strongly and significantly with the global rating score (Spearman’s rho =0.86, P<0.001). The participants’ first performance correlated with their second performance (Pearson’s r =0.93, P<0.001) (Figure 3).
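The two correlation coefficients reported here can be sketched in plain Python as follows. This is an illustrative sketch only: the helper names (`pearson`, `average_ranks`, `spearman`) and the score lists are hypothetical, and the study itself used SPSS. Spearman's rho is computed as Pearson's r on ranks, with tied values sharing their mean rank.

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


def average_ranks(values):
    """Ranks starting at 1; tied values receive the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rank correlation: Pearson's r applied to the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical scores for six participants (NOT study data)
first_performance = [14, 16, 15, 29, 33, 31]
second_performance = [17, 18, 16, 30, 32, 34]
print(f"test-retest Pearson r: {pearson(first_performance, second_performance):.2f}")
print(f"Spearman rho: {spearman(first_performance, second_performance):.2f}")
```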

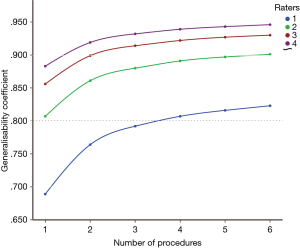

A generalisability study yielded a G-coefficient of 0.92. The D-study estimated that four performances assessed by one rater are needed to be sufficiently reliable (G-coefficient >0.80). If there is only one video-recorded performance of the procedure, two raters are needed to reach an acceptable G-coefficient (Figure 4).
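The logic of a D-study can be sketched as below, assuming a fully crossed participant × rater × performance design with random facets. The variance components in this sketch are hypothetical placeholders chosen for illustration; the study does not report its variance components, so the printed grid does not reproduce the published results. The function name `g_coefficient` is likewise an assumption.

```python
def g_coefficient(var_p, var_pr, var_po, var_residual, n_raters, n_performances):
    """Relative G-coefficient for a crossed participant x rater x performance design.

    var_p        : variance between participants (the signal)
    var_pr       : participant-by-rater interaction variance
    var_po       : participant-by-performance interaction variance
    var_residual : three-way interaction confounded with random error
    """
    relative_error = (
        var_pr / n_raters
        + var_po / n_performances
        + var_residual / (n_raters * n_performances)
    )
    return var_p / (var_p + relative_error)


# HYPOTHETICAL variance components (NOT estimated from the study data)
components = dict(var_p=10.0, var_pr=1.0, var_po=0.5, var_residual=2.0)

# Project reliability for different numbers of raters and performances
for n_raters in (1, 2, 4):
    for n_performances in (1, 2, 4):
        g = g_coefficient(**components, n_raters=n_raters,
                          n_performances=n_performances)
        print(f"raters={n_raters} performances={n_performances} G={g:.2f}")
```

Averaging over more raters or more performances shrinks the corresponding error terms, which is why a D-study can trade one against the other when planning an assessment.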

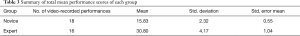

Relations to other variables

The LAT assessment tool was able to discriminate between the two groups in both performances. The experienced group performed significantly better, with a mean score of 30.8±4.2, while the mean score for the novice group was 15.8±2.3 (P<0.001). Table 3 presents a summary of the performances of both groups.

Consequences

The pass/fail standard as determined by the contrasting groups’ method was 22 points (Figure 5). None of the novices passed the test in either of the two performances. All experienced participants passed the test in the first performance, while one failed in the second performance (Figure 6).
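The contrasting groups' method can be sketched as finding the score at which two fitted normal curves cross. The sketch below uses only the group means and standard deviations reported in this article (15.8±2.3 and 30.8±4.2); the function name `normal_intersection` is hypothetical. Because the authors worked from the full score distributions, the value obtained from these summary statistics is close to, but need not coincide with, the reported standard of 22 points.

```python
from math import log, sqrt


def normal_intersection(mean_low, sd_low, mean_high, sd_high):
    """Score between the two means at which two normal densities are equal."""
    if sd_low == sd_high:
        # Equal spread: the curves cross midway between the means
        return (mean_low + mean_high) / 2
    # Equating the log-densities gives a quadratic a*x^2 + b*x + c = 0
    a = 1 / (2 * sd_low**2) - 1 / (2 * sd_high**2)
    b = mean_high / sd_high**2 - mean_low / sd_low**2
    c = (
        mean_low**2 / (2 * sd_low**2)
        - mean_high**2 / (2 * sd_high**2)
        - log(sd_high / sd_low)
    )
    discriminant = b**2 - 4 * a * c
    if discriminant < 0:
        raise ValueError("the two curves do not intersect")
    roots = [(-b + sqrt(discriminant)) / (2 * a),
             (-b - sqrt(discriminant)) / (2 * a)]
    between = [r for r in roots if mean_low <= r <= mean_high]
    if len(between) != 1:
        raise ValueError("no unique intersection between the group means")
    return between[0]


# Group means and SDs as reported in the Results (novice vs experienced)
cutoff = normal_intersection(15.8, 2.3, 30.8, 4.2)
print(f"intersection of the two fitted curves: {cutoff:.1f} points")
```

The crossing point lies closer to the novice mean than to the experienced mean because the novice scores are less spread out.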

Discussion

We designed a procedure-specific assessment tool to assess competences in LAT and established validity evidence based on five sources to support its intended use, that is, to assess competence in performing LAT. A pass/fail standard was also established to ensure that trainees have acquired the necessary skills to competently perform the procedure. This is the first study to describe an assessment tool for LAT with extensive validity evidence and a pass/fail standard to ensure proficiency.

Focused training and assessment are needed in LAT. Simulation is a safe and effective training ground for the acquisition of procedural skills and competences, as well as an ideal environment for structured assessment. In this study, we report strong validity evidence for the interpretation of performance scores based on the newly developed LAT assessment tool.

Validity of the assessment tool

Validity evidence for content was gathered by involving a group of experts within pulmonary medicine and medical education. One of the ways to devise test items is to involve experts with relevant knowledge and experience, to ensure that the content is representative of the construct that is intended to be investigated (16). This could be done in a group discussion or using a more systematic approach such as the Delphi method, as performed in other studies (19,20). The expert group included important steps when performing LAT. Because the study was set in a simulation-based environment, sterile technique was not initially considered; however, after pilot rating, the raters highlighted the importance of maintaining sterility during the procedure and added this as an additional item. Additionally, a global rating scale was added to allow us to test the completeness of the devised items.

We optimized the response process to minimize any biases during the testing and assessment processes. Data collection was performed in a controlled and standardised environment using the same investigator who also acted as the nurse in all procedures. Furthermore, meticulous rater training was performed to improve reliability (21,22).

The internal structure of the LAT assessment tool was reflected by a high internal consistency reliability, indicating that the items correlate to the purpose of the assessment, which is competency in LAT. This corresponds with high-stakes summative assessments such as certification or licensure examinations, where a reliability >0.90 is preferred (23). Additionally, the total item score correlated significantly with the global rating score, indicating that all items represent the same construct, that the items cover all important parts of the procedure, and that none should be discarded. Performing the procedure twice and being assessed by four raters increases the reliability, as reflected by a high G-coefficient. However, the D-study showed that to reach an acceptable reliability of >0.80, four video-recorded performances are needed if there is only one rater. Alternatively, a single performance is sufficient when assessed by two raters. These approaches are more feasible, less expensive, and easier to administer than our experimental use of four raters.

The LAT assessment tool was able to significantly discriminate between the performances of the novices and the experienced participants, indicating its capability to stratify different levels of experience. This strongly supports evidence for relations to other variables. The experienced group scored higher than the novice group. None of the novice participants passed the test; however, their performance scores improved in the second procedure compared with the first attempt, signifying a learning effect. The novices were all doctors in their first year of clerkship who volunteered to participate despite having no experience in the procedure. Their interest in learning was seen in their performances, which progressed from struggling to manipulate the thoracoscope in the first attempt to an evident improvement in the second performance. All the experienced participants passed the test in their first performance; however, as shown in the test scores, some of them scored lower in the second performance, and one of them failed it. One of the reasons could be that the experienced participants were highly competent and had already reached the plateau of the learning curve. Furthermore, the experienced participants were not accustomed to the simulation-based setting and consequently were unable to apply the habits and tricks that they would use during real LAT procedures. Another reason could be the differences in practices regarding LAT, including the continued use of rigid thoracoscopes in some institutions. These findings are consistent with other simulation-based studies, where novices progress towards a higher level of competency with training, while experienced operators or experts tend to underperform (21,24).

Establishing a pass/fail standard to define competence is a strength of the study. Standard setting serves as a benchmark or cutpoint between those who perform competently and those who do not (25). The changing education landscape towards competency-based education is accompanied by a demand for assessment methods to include standards for competence that will help with consequence decisions. We used the contrasting groups’ method to define the pass/fail standard for LAT in a simulation-based setting, a method that is well-accepted and is commonly used to establish standards for procedural skills (26).

The small sample size in this study is a limitation. The enrolment of more participants in each group might have given the study more power, increasing the reliability and generalisability of the results (27). Another limitation is the inclusion of novice participants who do not have any experience in LAT. Comparing novices to experts is one of the most common methods in validation studies but can inflate the reliability of the assessment (10,28). Nevertheless, the participants were not complete novices (e.g., students) but were doctors in their first year of clerkship and were assumed to have some skills and experience in performing technical procedures. One way to minimise this spectrum bias is to include participants who are representative of the intended target population, i.e., respiratory physicians in different stages of thoracoscopy education. The model used in the study did not allow ultrasonography to detect pleural effusion, which is a limitation since the use of pleural ultrasonography facilitates the insertion of the trocar. However, this simulation set-up is directed towards novice trainees, allowing them to train the critical procedural steps before progressing to the next stages, which could include evaluation and management of complications such as bleeding and unexpected septation.

Future implications

The newly developed assessment tool in LAT is targeted towards a standardized performance of thoracoscopy, and this procedural specificity makes it easier, more reliable, and more informative to use for feedback and certification. However, variations in how the procedure is performed (e.g., using ultrasound to ensure pneumothorax or using the camera during trocar insertion) may necessitate minor revisions of the tool to fit the local context. It can be used in a simulation-based environment and possibly also for assessment of supervised procedures on patients. Simulation with mastery learning provides an excellent opportunity to learn and train until a certain level of competency is reached before supervised training with patients. Several studies have reported the implementation of simulation-based training programmes in respiratory medicine, including summative assessment as end-of-course assessment or certification (29-31). Although more research is needed, for example on the use of the tool in the clinical environment, the study is an important first step. We hope to implement a LAT simulation-based training programme in which novices train the different steps and skills until they meet the pass/fail standard. The overall goal is to increase the quality of LAT in order to improve the diagnosis and staging of patients with malignant pleural disease for better prognosis.

Conclusions

The newly developed local anaesthetic thoracoscopy assessment tool is an objective, procedure-specific assessment tool with solid evidence of validity. It can be used to provide structured, formative feedback for trainees (during simulation-based training), as a summative assessment at the end of the course, and possibly for certification before supervised practice in the clinical environment.

Acknowledgments

Funding: None.

Footnote

Reporting Checklist: The authors have completed the MDAR checklist. Available at https://dx.doi.org/10.21037/jtd-20-3560

Data Sharing Statement: Available at https://dx.doi.org/10.21037/jtd-20-3560

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://dx.doi.org/10.21037/jtd-20-3560). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). It is an educational study that did not include patient participation and therefore did not require ethical approval in accordance with Danish law. Informed consent to participate in the study was obtained.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Rahman NM, Ali NJ, Brown G, et al. Local anaesthetic thoracoscopy: British Thoracic Society Pleural Disease Guideline 2010. Thorax 2010;65:ii54-60.

2. Willendrup F, Bodtger U, Colella S, et al. Diagnostic accuracy and safety of semirigid thoracoscopy in exudative pleural effusions in Denmark. J Bronchology Interv Pulmonol 2014;21:215-9.

3. Hallifax RJ, Corcoran JP, Psallidas I, et al. Medical thoracoscopy: Survey of current practice-How successful are medical thoracoscopists at predicting malignancy? Respirology 2016;21:958-60.

4. Roberts ME, Neville E, Berrisford RG, et al. Management of a malignant pleural effusion: British Thoracic Society pleural disease guideline 2010. Thorax 2010;65:ii32-ii40.

5. Rodríguez-Panadero F. Medical thoracoscopy. Respiration 2008;76:363-72.

6. Nayahangan LJ, Clementsen PF, Paltved C, et al. Identifying Technical Procedures in Pulmonary Medicine That Should Be Integrated in a Simulation-Based Curriculum: A National General Needs Assessment. Respiration 2016;91:517-22.

7. Konge L, Colella S, Vilmann P, et al. How to learn and to perform endoscopic ultrasound and endobronchial ultrasound for lung cancer staging: A structured guide and review. Endosc Ultrasound 2015;4:4-9.

8. Ernst A, Silvestri GA, Johnstone D. Interventional pulmonary procedures: Guidelines from the American College of Chest Physicians. Chest 2003;123:1693-717.

9. Scally G, Donaldson LJ. Clinical governance and the drive for quality improvement in the new NHS in England. BMJ 1998;317:61-5.

10. Cook DA, Hatala R. Validation of educational assessments: a primer for simulation and beyond. Adv Simul (Lond) 2016;1:31.

11. Lineberry M, Park YS, Cook DA, et al. Making the case for mastery learning assessments: Key issues in validation and justification. Acad Med 2015;90:1445-50.

12. Yudkowsky R, Park YS, Downing SM, editors. Assessment in health professions education. Routledge, 2019.

13. Messick S. Validity. In: Linn RL, editor. Educational measurement. New York: Macmillan, 1989:13-103.

14. Loddenkemper R, Lee P, Noppen M, et al. Medical thoracoscopy/pleuroscopy: step by step. Breathe 2011;8:156-67.

15. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009;42:377-81.

16. Streiner DL, Norman GR, Cairney J. Devising items. In: Health measurement scales: a practical guide to their development and use. 5th ed. Oxford: Oxford University Press, 2015:19-37.

17. Bloch R, Norman G. Generalizability theory for the perplexed: a practical introduction and guide: AMEE Guide No. 68. Med Teach 2012;34:960-92.

18. Yudkowsky R, Park YS, Lineberry M, et al. Setting mastery learning standards. Acad Med 2015;90:1495-500.

19. Strøm M, Lönn L, Bech B, et al. Assessment of competence in EVAR procedures: a novel rating scale developed by the Delphi technique. Eur J Vasc Endovasc Surg 2017;54:34-41.

20. Savran MM, Clementsen PF, Annema JT, et al. Development and validation of a theoretical test in endosonography for pulmonary diseases. Respiration 2014;88:67-73.

21. Rasmussen KMB, Hertz P, Laursen CB, et al. Ensuring Basic Competence in Thoracentesis. Respiration 2019;97:463-71.

22. Robertson RL, Vergis A, Gillman LM, et al. Effect of rater training on the reliability of technical skill assessments: a randomized controlled trial. Can J Surg 2018;61:15917.

23. Downing SM. Reliability: on the reproducibility of assessment data. Med Educ 2004;38:1006-12.

24. Dyre L, Norgaard LN, Tabor A, et al. Collecting Validity Evidence for the Assessment of Mastery Learning in Simulation-Based Ultrasound Training. Ultraschall Med 2016;37:386-92.

25. Norcini JJ. Setting standards on educational tests. Med Educ 2003;37:464-69.

26. Goldenberg MG, Garbens A, Szasz P, et al. Systematic review to establish absolute standards for technical performance in surgery. Br J Surg 2017;104:13-21.

27. Cook DA, Hatala R. Got power? A systematic review of sample size adequacy in health professions education research. Adv Health Sci Educ Theory Pract 2015;20:73-83.

28. Norman G. Data dredging, salami-slicing, and other successful strategies to ensure rejection: twelve tips on how to not get your paper published. Adv Health Sci Educ Theory Pract 2014;19:1-5.

29. Konge L, Vilmann P, Clementsen P, et al. Reliable and valid assessment of competence in endoscopic ultrasonography and fine-needle aspiration for mediastinal staging of non-small cell lung cancer. Endoscopy 2012;44:928-33.

30. Konge L, Arendrup H, Von Buchwald C, et al. Using performance in multiple simulated scenarios to assess bronchoscopy skills. Respiration 2011;81:483-90.

31. Konge L, Clementsen P, Larsen KR, et al. Establishing pass/fail criteria for bronchoscopy performance. Respiration 2012;83:140-46.